Integrating advanced technologies like AI and machine learning into your research is not just a trend but a necessity.

At Labguru, we are constantly investigating the integration of new technologies to simplify and enhance lab operations and data management processes. So, where does Generative AI fit in our technology stack? And where can it save you time and resources?

In this blog post, we demonstrate how to harness AI to manage increasing volumes of scientific data by enriching your Labguru entities using a Generative Pre-trained Transformer (GPT) for Named Entity Recognition (NER).

Advantages of Utilizing GPT alongside Labguru

GPT (Generative Pre-trained Transformer) is an advanced AI language model developed by OpenAI. Trained on a large and diverse body of text, it can understand and generate human-like text.

GPT's ability to process and interpret large volumes of text makes it a powerful tool in natural language processing tasks, including Named Entity Recognition, which is pivotal in handling complex scientific literature.

Named Entity Recognition (NER) is a crucial aspect of natural language processing (NLP) focused on identifying and classifying essential information in text. It involves detecting specific entities, such as names of people, organizations, locations, and technical terms relevant to scientific discourse.

Because GPT can follow natural-language instructions, we can use it directly for NER on scientific texts: we describe the task in a prompt, and the model identifies and extracts essential information such as specific terminology, data points, and relevant entities, with no dedicated NER model to train. This direct approach simplifies organizing and managing scientific data and makes research data management more efficient.
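A minimal sketch of this direct approach, assuming you want typed entities rather than plain keywords: it builds a Chat Completions request body in JSON mode and parses a reply. The prompt wording, the entity types, the `{"entities": [...]}` reply shape, and the helper names are our assumptions; no API call is made, and the sample reply is made up.

```python
import json
from collections import defaultdict

# Hypothetical entity types for scientific text; adjust them to your domain.
ENTITY_TYPES = ["organism", "gene_or_protein", "chemical", "method", "cell_component"]


def build_ner_payload(text: str, model: str = "gpt-3.5-turbo") -> dict:
    """Hypothetical helper: Chat Completions body asking for typed entities as JSON."""
    system = (
        "Extract named entities from the user's text. "
        f"Allowed types: {', '.join(ENTITY_TYPES)}. "
        "Respond with a JSON object of the form "
        '{"entities": [{"text": "...", "type": "..."}]}.'
    )
    return {
        "model": model,
        "response_format": {"type": "json_object"},  # JSON mode
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": text},
        ],
    }


def group_entities(content: str) -> dict[str, list[str]]:
    """Hypothetical helper: group entity strings from the model's reply by type.

    Entities with an unknown type or empty text are dropped; repeats within
    a type are kept once, in first-seen order.
    """
    grouped: dict[str, list[str]] = defaultdict(list)
    for entity in json.loads(content).get("entities", []):
        etype = entity.get("type")
        etext = (entity.get("text") or "").strip()
        if etype in ENTITY_TYPES and etext and etext not in grouped[etype]:
            grouped[etype].append(etext)
    return dict(grouped)


# Made-up reply, shaped the way the prompt asks for.
reply = (
    '{"entities": [{"text": "ribosomes", "type": "cell_component"}, '
    '{"text": "E. coli", "type": "organism"}, '
    '{"text": "ribosomes", "type": "cell_component"}, '
    '{"text": "stress", "type": "mood"}]}'
)
print(group_entities(reply))
```

Restricting the reply to a fixed list of types keeps the output predictable enough to map onto fields or tags in an ELN.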

Practical Application: Extracting Keywords and Tagging in Labguru

Step 1: Fetching a Paper Using the Labguru API

First, let's fetch a scientific paper from your Labguru workspace.

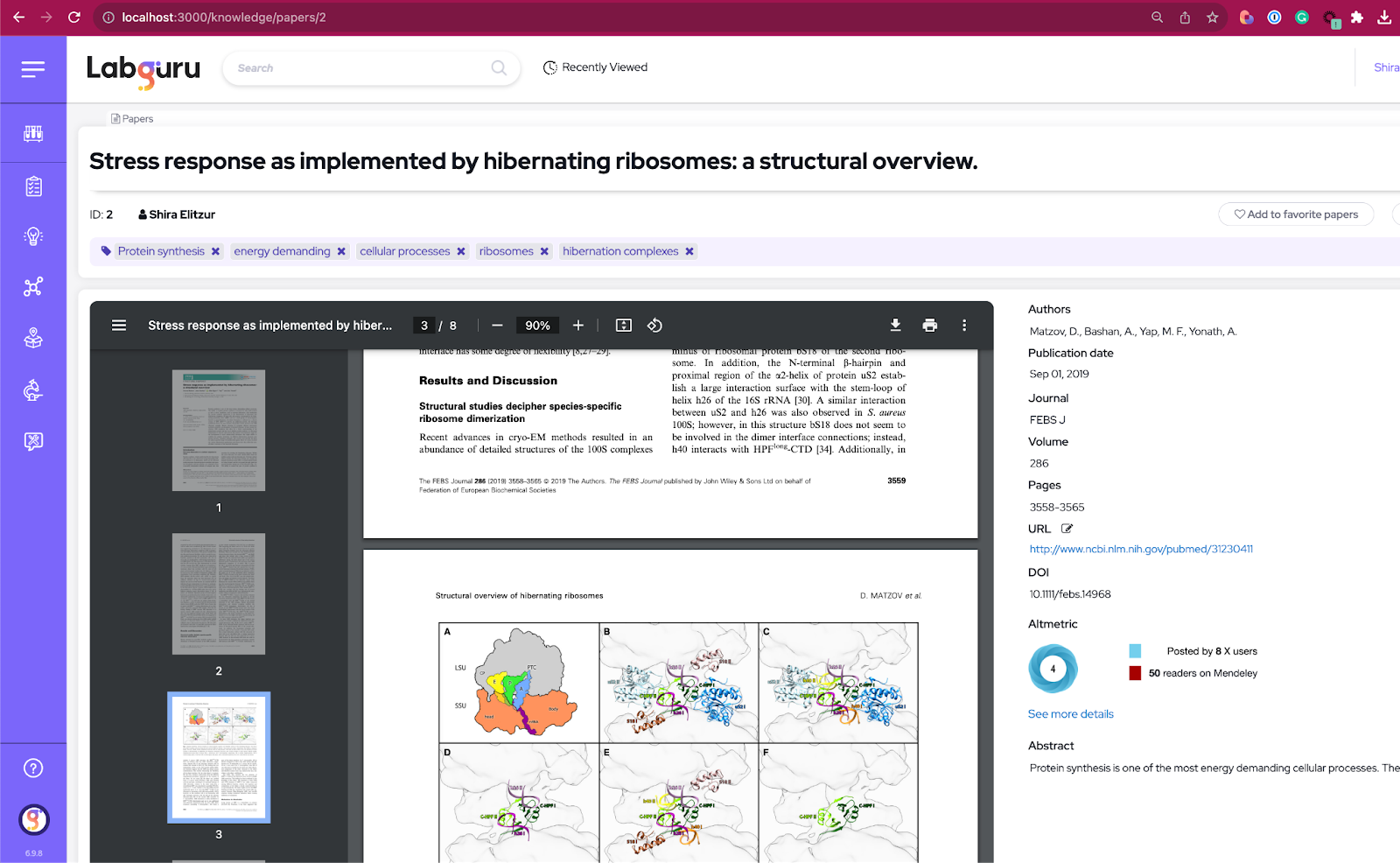

Below is a screenshot of the paper I am interested in from my Labguru workspace:

Here's a Python code snippet to fetch the paper entity from Labguru’s API:

```python
import json  # also used in Step 2

import requests

# Authenticate to get your Labguru token
LABGURU_BASE = "https://your_labguru_domain"

auth_url = f"{LABGURU_BASE}/api/v1/sessions.json"
credentials = {"login": "your_email", "password": "your_password"}

auth_response = requests.post(auth_url, json=credentials)
auth_response.raise_for_status()  # stop early if the login failed
LABGURU_TOKEN = auth_response.json()["token"]

# Replace with the id of the paper you wish to fetch
paper_id = 2

# Fetch a specific paper; the token is sent as a query parameter
url = f"{LABGURU_BASE}/api/v1/papers/{paper_id}"
response = requests.get(url, params={"token": LABGURU_TOKEN})
response.raise_for_status()
paper = response.json()

print(paper)
```
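Long texts can exceed what you want to send in a single prompt, and a field may be empty. A minimal sketch, assuming you want to normalize whitespace and cap the prompt size: `text_for_prompt` is a hypothetical helper, `review` is simply the field Step 2 reads, and the 6,000-character limit is an arbitrary stand-in for a proper token count.

```python
def text_for_prompt(paper: dict, field: str = "review", max_chars: int = 6000) -> str:
    """Hypothetical helper: whitespace-normalized text from `paper[field]`.

    Missing or empty fields yield "". Text longer than `max_chars` is cut,
    and a trailing partial word is dropped.
    """
    raw = paper.get(field) or ""
    text = " ".join(str(raw).split())  # collapse newlines and repeated spaces
    if len(text) <= max_chars:
        return text
    cut = text[:max_chars]
    if text[max_chars] != " " and " " in cut:
        cut = cut.rsplit(" ", 1)[0]  # the cut landed mid-word; drop that word
    return cut
```

You would then use `text_for_prompt(paper)` in place of `paper['review']` when building the prompt in Step 2.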

Step 2: Extracting Keywords with OpenAI's GPT

Next, we use OpenAI's GPT for NER to extract keywords from the paper's abstract:

```python
# Continues from Step 1: reuses `requests`, `json`, and `paper`
OPENAI_KEY = "your_openai_api_key_here"  # Replace with your OpenAI API key
OPEN_AI_BASE = "https://api.openai.com/v1"

# Let's organize the input for the chat completions task.
# JSON mode returns a JSON object, so we ask for the tags under a "tags" key.
instructions = """
Your task is to provide a list of up to 5 tagging options to tag a given
text in an ELN system.
Each tag is a single word or a phrase of at most 2 words.
Respond with a JSON object holding an array of tags as strings:
{"tags": ["tag_a", "tag_b", ...]}
"""

prompt = f"Extract keywords from this text: {paper['review']}"

payload = {
    "model": "gpt-3.5-turbo",
    "response_format": {"type": "json_object"},
    "messages": [
        {"role": "system", "content": instructions},
        {"role": "user", "content": prompt},
    ],
}
headers = {"Authorization": f"Bearer {OPENAI_KEY}"}  # json= sets Content-Type

url = f"{OPEN_AI_BASE}/chat/completions"
response = requests.post(url, headers=headers, json=payload)
response.raise_for_status()

# The model's reply is a JSON string inside the first choice's message
content = response.json()["choices"][0]["message"]["content"]
tags = json.loads(content)["tags"]
print(f"Suggested Tags for the paper -> {json.dumps(tags)}")

# output:
# > Suggested Tags for the paper -> ["Protein synthesis", "energy demanding", "cellular processes", "ribosomes", "hibernation complexes"]
```

Step 3: Adding Keywords as Tags in Labguru
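Before writing anything back to Labguru, it can help to check the model's suggestions, because the model does not always follow the prompt: it may return more than five tags, longer phrases, duplicates that differ only in case, or a bare array instead of an object. The hypothetical helper `clean_tags` is a minimal sketch of such a check; its limits mirror the Step 2 instructions, and accepting a bare list is our own defensive choice.

```python
import json


def clean_tags(content: str, max_tags: int = 5, max_words: int = 2) -> list[str]:
    """Hypothetical helper: parse the model's JSON reply and enforce the tag rules."""
    data = json.loads(content)
    # Accept {"tags": [...]} as requested, or a bare list as a fallback.
    if isinstance(data, dict):
        candidates = data.get("tags", [])
    elif isinstance(data, list):
        candidates = data
    else:
        candidates = []

    tags: list[str] = []
    seen: set[str] = set()
    for item in candidates:
        if not isinstance(item, str):
            continue  # ignore numbers, nulls, nested objects
        tag = " ".join(item.split())  # normalize internal whitespace
        key = tag.casefold()  # case-insensitive duplicate check
        if not tag or len(tag.split()) > max_words or key in seen:
            continue  # skip empty, over-long, or duplicate tags
        seen.add(key)
        tags.append(tag)
        if len(tags) == max_tags:
            break
    return tags
```

To use it, pass it the message content returned by the chat completions call in Step 2 and use the returned list as `tags` in the loop below.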

Finally, let’s add these extracted keywords as tags to the paper in Labguru:

```python
url = f"{LABGURU_BASE}/api/v1/tags"

for tag in tags:
    data = {
        "item": {
            "tag": tag,
            "class_name": paper["class_name"],
            "item_id": paper["id"],
        },
        "token": LABGURU_TOKEN,
    }
    response = requests.post(url, json=data)
    response.raise_for_status()  # only report success if the request succeeded
    print(f"New Tag '{tag}' was added to paper #{paper['id']}")

# output:
# > New Tag 'Protein synthesis' was added to paper #2
# > New Tag 'energy demanding' was added to paper #2
# > New Tag 'cellular processes' was added to paper #2
# > New Tag 'ribosomes' was added to paper #2
# > New Tag 'hibernation complexes' was added to paper #2
```

And here are the new tags in the Labguru paper:

Benefits and Future Insights

This integration offers several benefits:

- Efficiency: Automating the extraction and tagging process saves valuable research time.

- Data Organization: Enhanced keyword tagging leads to better organization and searchability within Labguru.

- Scalability: Tagging one or two papers by hand is easy, but this method can be applied automatically to a large number of documents, supporting large-scale data analysis.
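The scalability point can be sketched as a batch loop. To keep the sketch self-contained and testable offline, the three steps are passed in as functions; `tag_papers`, `fetch_paper`, `suggest_tags`, and `add_tag` are hypothetical names, and in practice each function would wrap the corresponding request from Steps 1 to 3. Errors are recorded per paper, so one failing document does not stop the batch.

```python
from typing import Callable


def tag_papers(
    paper_ids: list[int],
    fetch_paper: Callable[[int], dict],
    suggest_tags: Callable[[str], list[str]],
    add_tag: Callable[[dict, str], None],
) -> dict[int, str]:
    """Hypothetical batch driver: fetch -> suggest -> tag, with a status per id."""
    status: dict[int, str] = {}
    for paper_id in paper_ids:
        try:
            paper = fetch_paper(paper_id)
            # "review" is the field Step 2 reads; missing text becomes "".
            tags = suggest_tags(paper.get("review") or "")
            for tag in tags:
                add_tag(paper, tag)
            status[paper_id] = f"tagged ({len(tags)} tags)"
        except Exception as exc:  # deliberate catch-all: record it and move on
            status[paper_id] = f"failed: {exc}"
    return status


# Offline demo with stand-in functions (no network calls).
papers = {1: {"id": 1, "review": "ribosome hibernation"}, 2: {"id": 2, "review": ""}}
added = []
result = tag_papers(
    [1, 2, 3],
    fetch_paper=lambda pid: papers[pid],  # id 3 is missing, so it raises KeyError
    suggest_tags=lambda text: text.split()[:2],
    add_tag=lambda paper, tag: added.append((paper["id"], tag)),
)
print(result)
```

Passing the steps in as functions also leaves room to add rate limiting or retries around the OpenAI call without changing the loop itself.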

Looking ahead, there is plenty of room to build on this approach, from refining the NER process to integrating more capable AI models for deeper data analysis.

Conclusion

By combining Labguru with large language models (LLMs) such as GPT, you can bring a new level of efficiency and organization to your scientific research. We hope this guide inspires you to explore the potential of AI in your data management processes.

We’ve recently introduced our latest innovation, Labguru Assistant, an AI chatbot powered by OpenAI models or an in-house LLM, designed to help scientists like you navigate the complexities of modern research more easily and efficiently.

Learn how Labguru Assistant can streamline your research workflows and make them more efficient.
